Hello!

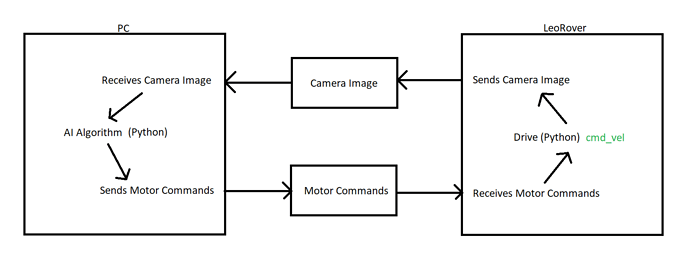

I’m currently trying to develop an AI application on the LeoRover that would look like this:

I need to be able to access the live camera stream, grab the current frame, feed it to my AI algorithm, and then send the resulting motor commands back to Leo.

For now, here is what I have:

- On the computer side, I access the video stream as follows:

  ```python
  import cv2

  # Grab a single frame from Leo's web_video_server stream
  cap = cv2.VideoCapture('http://10.0.0.1:8080/stream?topic=/camera/image_raw&type=ros_compressed')
  ret, frame = cap.read()  # ret is False if no frame could be read
  cap.release()
  ```

- I then generate the motor command vector, which looks like [0, 0], [0.1, 0.1], etc.

- I send these values back to Leo over a socket.

- On Leo, I publish the values on the cmd_vel topic.

- Loop back to the first step.
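The socket hop in these steps is a place where commands can arrive merged or split, because TCP delivers a byte stream with no message boundaries. A minimal sketch of one way to frame the messages, assuming each command is a two-element list and using a hypothetical newline-delimited JSON format (`encode_command`, `send_command` and `iter_commands` are illustrative names, not part of Leo's software):

```python
import json
import socket

# Hypothetical wire format: one JSON array per line, e.g. b"[0.1, 0.1]\n".
# The newline marks where each command ends, so the receiver can rebuild
# whole messages no matter how recv() chunks the byte stream.


def encode_command(cmd):
    """Serialize a two-element motor command into one newline-terminated message."""
    return (json.dumps([float(cmd[0]), float(cmd[1])]) + "\n").encode("utf-8")


def send_command(sock, cmd):
    """PC side: send one command over an already-connected TCP socket."""
    sock.sendall(encode_command(cmd))


def iter_commands(sock):
    """Leo side: yield each command as soon as its full line has arrived."""
    buffer = b""
    while True:
        chunk = sock.recv(4096)
        if not chunk:  # the peer closed the connection
            return
        buffer += chunk
        while b"\n" in buffer:
            line, buffer = buffer.split(b"\n", 1)
            if line.strip():
                yield json.loads(line)


if __name__ == "__main__":
    # Local demo: a connected socket pair stands in for the PC and Leo.
    pc_side, leo_side = socket.socketpair()
    send_command(pc_side, [0, 0])
    send_command(pc_side, [0.1, 0.1])
    pc_side.close()
    print(list(iter_commands(leo_side)))  # [[0.0, 0.0], [0.1, 0.1]]
    leo_side.close()
```

On Leo, each command yielded by `iter_commands` would then be converted and published on `cmd_vel`, as in the existing server.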

The client/server approach seems to work fine most of the time, but once in a while the camera node disconnects and I lose the image.
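The snippet above opens and releases a new stream connection for every frame. A minimal sketch of one mitigation, assuming the drops are transient: keep a single capture open across iterations and reopen it only when `read()` fails. `ReconnectingStream` is a hypothetical helper; `open_capture` would be something like `lambda: cv2.VideoCapture(url)`, and the class only relies on the `isOpened()`, `read()` and `release()` methods that `cv2.VideoCapture` provides. This works around the symptom and does not explain why the camera node drops.

```python
import time


class ReconnectingStream:
    """Keep one capture open and reopen it when a read fails.

    `open_capture` is any zero-argument callable returning an object with
    isOpened(), read() and release(), e.g. lambda: cv2.VideoCapture(URL).
    """

    def __init__(self, open_capture, max_retries=5, retry_delay=0.5):
        self._open = open_capture
        self._max_retries = max_retries
        self._retry_delay = retry_delay  # seconds to wait between attempts
        self._cap = None

    def read(self):
        """Return the next frame, reopening the stream up to max_retries times."""
        for attempt in range(self._max_retries + 1):
            if self._cap is None or not self._cap.isOpened():
                self._cap = self._open()
            ok, frame = self._cap.read()
            if ok:
                return frame
            # The stream dropped: release it so the next attempt opens a fresh one.
            self._cap.release()
            self._cap = None
            if attempt < self._max_retries:
                time.sleep(self._retry_delay)
        raise ConnectionError("camera stream unavailable after retries")

    def release(self):
        """Close the underlying capture, if one is open."""
        if self._cap is not None:
            self._cap.release()
            self._cap = None
```

In the main loop, `frame = stream.read()` would replace the open/read/release sequence, with `stream.release()` called once on shutdown.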

My first question is: Is there a better approach for this application?
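Whatever transport ends up being used, one ingredient that tends to make this kind of loop safer is a watchdog on the robot side: if no fresh command has arrived recently (for example because the image was lost and the PC stopped sending), Leo stops instead of repeating the last command. A sketch, where `CommandWatchdog` and the 0.5 s timeout are hypothetical choices and `[0.0, 0.0]` is assumed to mean "stop":

```python
import time


class CommandWatchdog:
    """Return the last command while it is fresh, otherwise a stop command."""

    STOP = [0.0, 0.0]  # assumed to mean "no motion" in this command format

    def __init__(self, timeout=0.5, clock=time.monotonic):
        self._timeout = timeout  # seconds a command stays valid
        self._clock = clock      # injectable so the logic can be tested
        self._last_cmd = list(self.STOP)
        self._last_time = None

    def update(self, cmd):
        """Record a newly received command and the time it arrived."""
        self._last_cmd = list(cmd)
        self._last_time = self._clock()

    def current(self):
        """Command to publish now: the last one, or STOP if it is stale."""
        if self._last_time is None or self._clock() - self._last_time > self._timeout:
            return list(self.STOP)
        return list(self._last_cmd)
```

On Leo, `update()` would be called for each command received from the PC, while a separate fixed-rate loop (say 10 Hz) publishes `current()` on `cmd_vel`.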

My second question is: Is there a way to access the image topic directly from my PC (I’m connected to Leo’s Wi-Fi)?
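If Leo runs ROS 1 and its ROS master is reachable over the Wi-Fi, one option is to make the PC a ROS node itself: subscribe to the compressed image topic and publish `geometry_msgs/Twist` on `/cmd_vel` directly, which removes both the HTTP stream and the custom socket. The sketch below assumes the master is at `10.0.0.1` on the default port 11311, that `/camera/image_raw/compressed` is published (the `ros_compressed` stream type suggests it is), and that a command `[a, b]` means linear and angular velocity; `run_policy`, `command_to_velocities` and the speed limits are hypothetical placeholders.

```python
#!/usr/bin/env python3
"""Sketch: run the AI loop on the PC as a ROS 1 node, without a custom socket.

Assumptions (not confirmed for this setup):
  * Leo's ROS master is reachable (ROS_MASTER_URI / ROS_IP set on the PC)
  * compressed images are published on /camera/image_raw/compressed
  * a command [a, b] means [linear x in m/s, angular z in rad/s]
"""

MAX_LINEAR = 0.4   # hypothetical safety limits; choose values for your robot
MAX_ANGULAR = 1.0


def clamp(value, limit):
    return max(-limit, min(limit, value))


def command_to_velocities(cmd):
    """Map a hypothetical [linear, angular] command to clamped (x, z) values."""
    linear, angular = float(cmd[0]), float(cmd[1])
    return clamp(linear, MAX_LINEAR), clamp(angular, MAX_ANGULAR)


def run_policy(frame):
    """Placeholder for the AI algorithm; returns a two-element command."""
    return [0.0, 0.0]


def main():
    import cv2
    import numpy as np
    import rospy
    from geometry_msgs.msg import Twist
    from sensor_msgs.msg import CompressedImage

    rospy.init_node("ai_driver")
    cmd_pub = rospy.Publisher("/cmd_vel", Twist, queue_size=1)

    def on_image(msg):
        # Decode the compressed payload into a BGR image, like cap.read() returns.
        frame = cv2.imdecode(np.frombuffer(msg.data, np.uint8), cv2.IMREAD_COLOR)
        if frame is None:
            return
        twist = Twist()
        twist.linear.x, twist.angular.z = command_to_velocities(run_policy(frame))
        cmd_pub.publish(twist)

    # queue_size=1 with a large buff_size keeps only the newest frame, so a slow
    # policy does not work through a backlog of stale images.
    rospy.Subscriber("/camera/image_raw/compressed", CompressedImage, on_image,
                     queue_size=1, buff_size=2**24)
    rospy.spin()


if __name__ == "__main__":
    try:
        import rospy  # noqa: F401
    except ImportError:
        print("rospy not found: source your ROS setup.bash first")
    else:
        main()
```

Before running it, the PC typically needs `ROS_MASTER_URI` pointing at Leo (e.g. `http://10.0.0.1:11311`) and `ROS_IP` set to the PC's own address on Leo's network, so that nodes on Leo can connect back to the PC's publisher.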

Thanks for your help and let me know if you need more information.

Note: I’m not trying to make you do the work for me; I’m looking for pointers on how to develop a robust solution for my problem!